In which I discover how Avahi slowed down ssh

This page, at the time of writing, is served by a virtual machine running at linode. Nothing special: just Debian 6. The node is more than just an Apache server: I connect to it via ssh a lot (most often indirectly, with Git).

As long as I can remember, there’s been a noticeable delay in establishing an ssh connection. I’ve always written this off as one of the side-effects of living so far away from the server; but the delay is present even when I’m in the US, and doesn’t affect latency as much once the connection is established.

Tonight, I decided to find out why.

First, I started a parallel instance of sshd on my VM, running on an

alternate port.

# /usr/sbin/sshd -ddd -p 2222

Then I connected to this port from my local system.

$ ssh -vvv ln1.civilfritz.net -p 2222

I watched the debug output in both terminals, watching for any obvious delay. On the server, that delay was preceded by a particular log entry.

debug3: Trying to reverse map address 109.171.130.234.

The log on the client was less damning: just a reference to checking the assumable locations for ssh keys.

debug2: key: /Users/janderson/.ssh/id_ecdsa (0x0)

I confirmed that the address referenced by the server was one of the

external NAT addresses used by my

ISP. Presumably

sshd or PAM is trying to determine a name for use in authorization

assertions.

At that point, I set out to understand why there was a delay reverse-mapping the address. Unsurprisingly, KAUST hasn’t populated reverse-dns for the address.

$ time host 109.171.130.234 Host 234.130.171.109.in-addr.arpa. not found: 3(NXDOMAIN) real 0m0.010s user 0m0.004s sys 0m0.005s

That said, getting the confirmation that the reverse-dns was unpopulated did not take the seconds that were seen as a delay establishing an ssh connection.

I had to go… deeper.

# strace -t /usr/sbin/sshd -d -p 2222

Here I invoke the ssh server with a system call trace. I included timestamps in the trace output so I could more easily locate the delay, but I was able to see it in real-time.

00:27:44 socket(PF_FILE, SOCK_STREAM, 0) = 4

00:27:44 fcntl64(4, F_GETFD) = 0

00:27:44 fcntl64(4, F_SETFD, FD_CLOEXEC) = 0

00:27:44 connect(4, {sa_family=AF_FILE, path="/var/run/avahi-daemon/socket"}, 110) = 0

00:27:44 fcntl64(4, F_GETFL) = 0x2 (flags O_RDWR)

00:27:44 fstat64(4, {st_mode=S_IFSOCK|0777, st_size=0, ...}) = 0

00:27:44 mmap2(NULL, 4096, PROT_READ|PROT_WRITE, MAP_PRIVATE|MAP_ANONYMOUS, -1, 0) = 0xb7698000

00:27:44 _llseek(4, 0, 0xbf88eb18, SEEK_CUR) = -1 ESPIPE (Illegal seek)

00:27:44 write(4, "RESOLVE-ADDRESS 109.171.130.234\n", 32) = 32

00:27:44 read(4, "-15 Timeout reached\n", 4096) = 20

00:27:49 close(4) = 0

That’s right: a five second delay. The horror!

Pedantic troubleshooting aside, the delay is introduced while reading

from /var/run/avahi-daemon/socket. Sure enough, I have

Avahi installed.

$ dpkg --get-selections '*avahi*' avahi-daemon install libavahi-client3 install libavahi-common-data install libavahi-common3 install libavahi-compat-libdnssd1 install libavahi-core7 install

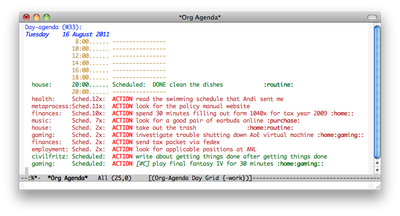

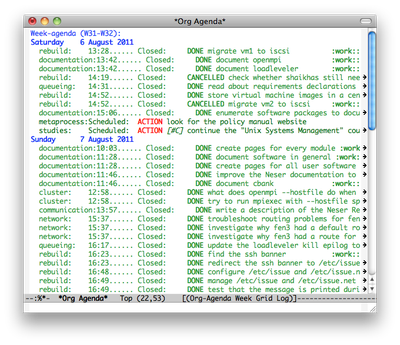

Some research that is difficult to represent here illuminated the fact that Avahi was installed when I installed Mumble. (Mumble might actually be the most-used service on this box, after my use of it to store my Org files. Some friends of mine use it for voice chat in games.)

I briefly toyed with the idea of tracking down the source of the delay in Avahi; but this is a server in a dedicated, remote datacenter. I can’t imagine any use case for an mDNS resolver in this environment, and I’m certainly not using it now.

# service stop avahi-daemon

Now it takes less than three seconds to establish the connection. Not bad for halfway across the world.

$ time ssh janderson@ln1.civilfritz.net -p 2222 /bin/false real 0m2.804s user 0m0.017s sys 0m0.006s

Better yet, there are no obvious pauses in the debugging output on the server.

Avahi hooks into the system name service using nsswitch.conf, just

as you’d expect.

$ grep mdns /etc/nsswitch.conf hosts: files mdns4_minimal [NOTFOUND=return] dns mdns4

Worst case I could simply edit this config file to remove mDNS; but I

wanted to remove it from my system altogether. It should just be

“recommended” by mumble-server, anyway.

# aptitude purge avahi-daemon libnss-mdns

With the relevant packages purged from my system, nsswitch.conf is

modified (automatically) to match.

$ grep hosts: /etc/nsswitch.conf hosts: files dns

Problem solved.