Travails of the KAUST cafeteria

My lunchtime failure, expressed serendipitously via haiku.

Finally, brothers, whatever is true, whatever is honorable, whatever is just, whatever is pure, whatever is lovely, whatever is commendable, if there is any excellence, if there is anything worthy of praise, think about these things.

Security is mostly a superstition. It does not exist in nature, nor do the children of men as a whole experience it. Avoiding danger is no safer in the long run than outright exposure. Life is either a daring adventure, or nothing.

So there I was, idly doing some personal sysadmin on my stateside virtual machine, when I decided, “It’s been a while since I’ve done a dist-upgrade. I should look at the out-of-date packages.”

apt-get update, and immediately I noticed a problem. It was kind of obvious, what with it hanging all over my shell and all.

    # apt-get update
    0% [Connecting to ftp.us.debian.org] [Connecting to security.debian.org] [Connecting to apt.puppetlabs.com]^C

I quickly tracked a few symptoms back to DNS. I couldn’t get a response off of my DNS servers.

    $ host google.com
    ;; connection timed out; no servers could be reached

I use Linode for vps hosting, and they provide a series of resolving name servers for customer use. It seemed apparent to me that the problem wasn’t on my end–I could still ssh into the box, and access my webserver from the Internet–so I contacted support about their DNS service not working.

Support was immediately responsive; but, after confirming my vm location, they reported that the nameservers seemed to be working correctly. Further, the tech pointed out that he couldn’t ping my box.

No ping? Nope. So I can’t DNS out, and I can’t ICMP at all. “I have just rewritten my iptables config, so it’s possible enough that I’ve screwed something up, there; but, with a default policy of ACCEPT on OUTPUT, I don’t know what I could have done there to affect this.” I passed my new config along, hoping that the tech would see something obvious that I had missed. He admitted that it all looked normal, but that, in the end, he couldn’t support the configuration I put on my vm.

“For more hands on help with your iptables rules you may want to reach out to the Linode community.”

Boo. Time to take a step back.

I use Puppet to manage iptables. More specifically, until recently, I have been using `bobsh/iptables <http://forge.puppetlabs.com/bobsh/iptables>`__, a Puppet module that models individual rules with a native Puppet type.

    iptables { 'http':
      state => 'NEW',
      proto => 'tcp',
      dport => '80',
      jump  => 'ACCEPT',
    }

There’s a newer, more official module out now, though: `puppetlabs/firewall <http://forge.puppetlabs.com/puppetlabs/firewall>`__. This module basically does the same thing as bobsh/iptables, but it’s maintained by Puppet Labs and is positioned for eventual portability to other firewall systems. Plus, whereas bobsh/iptables concatenates all of its known rules and then replaces any existing configuration, puppetlabs/firewall manages the tables in-place, allowing other systems (e.g., fail2ban) to add rules out-of-band without conflict.

In other words: new hotness.

The porting effort was pretty minimal. Soon, I had replaced all of my rules with the new format.

    firewall { '100 http':
      state  => 'NEW',
      proto  => 'tcp',
      dport  => '80',
      action => 'accept',
    }

Not that different. I’m using lexical ordering prefixes now, but I could have done that before. The big win, though, is the replacement of the pre and post fixtures with now-explicit pervasive rule order.

    file { '/etc/puppet/iptables/pre.iptables':
      source => 'puppet:///modules/s_iptables/pre.iptables',
      owner  => 'root',
      group  => 'root',
      mode   => '0600',
    }

    file { '/etc/puppet/iptables/post.iptables':
      source => 'puppet:///modules/s_iptables/post.iptables',
      owner  => 'root',
      group  => 'root',
      mode   => '0600',
    }

See, bobsh/iptables uses a pair of flat files to define a static set of rule fixtures that should always be present.

    # pre.iptables
    -A INPUT -i lo -j ACCEPT
    -A INPUT -m state --state RELATED,ESTABLISHED -j ACCEPT

    # post.iptables
    -A INPUT -j REJECT --reject-with icmp-host-prohibited
    -A FORWARD -j REJECT --reject-with icmp-host-prohibited

So, in my fixtures, loopback connections were always ACCEPTed, as were any existing connections flagged by connection tracking. Everything else (that isn’t allowed by a rule between these fixtures) is REJECTed. This works well enough, but the flat files are a bit of a hack.

    firewall { '000 accept localhost':
      iniface => 'lo',
      action  => 'accept',
    }

    firewall { '000 accept tracked connections':
      state  => ['RELATED', 'ESTABLISHED'],
      action => 'accept',
    }

    firewall { '999 default deny (input)':
      proto  => 'all',
      action => 'reject',
    }

    firewall { '999 default deny (forward)':
      chain  => 'FORWARD',
      proto  => 'all',
      action => 'reject',
      reject => 'icmp-host-prohibited',
    }

That’s much nicer. (I think so, anyway.) Definitely more flexible.

Anyway: I spent thirty minutes or so porting my existing rules over to puppetlabs/firewall, with no real problems to speak of. Until, of course, I realized I couldn’t query DNS.

What could have possibly changed? The new configuration is basically one-to-one with the old configuration.

    :INPUT ACCEPT [0:0]
    [...]
    -A INPUT -p tcp -m comment --comment "000 accept tracked connections" -m state --state RELATED,ESTABLISHED -j ACCEPT
    [...]
    -A INPUT -m comment --comment "900 default deny (input)" -j REJECT --reject-with icmp-port-unreachable

Oh.

So, it turns out that puppetlabs/firewall has default values. In particular, proto defaults to tcp. That’s probably the common case, but it was surprising. End result? That little -p tcp in my connection tracking rule means that icmp, udp, and anything else other than tcp never matches the rule that accepts RELATED and ESTABLISHED traffic. The udp response from the DNS server doesn’t get picked up, so it’s rejected at the end.
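The effect of that default can be sketched in a few lines of Python. This is only a toy model of first-match rule evaluation, not the module's or netfilter's actual code; the `Packet`, `Rule`, and `verdict` names are invented for illustration, and the only behaviour borrowed from the text above is that an unspecified proto means tcp.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Packet:
    proto: str  # 'tcp', 'udp', 'icmp', ...
    state: str  # connection tracking state, e.g. 'NEW' or 'ESTABLISHED'


@dataclass(frozen=True)
class Rule:
    action: str                         # 'accept' or 'reject'
    proto: str = "tcp"                  # assumption: mirrors the default described above
    states: Optional[frozenset] = None  # None means "any state"

    def matches(self, pkt: Packet) -> bool:
        proto_ok = self.proto == "all" or self.proto == pkt.proto
        state_ok = self.states is None or pkt.state in self.states
        return proto_ok and state_ok


def verdict(rules, pkt):
    """The first matching rule decides; otherwise fall back to a policy of ACCEPT."""
    for rule in rules:
        if rule.matches(pkt):
            return rule.action
    return "accept"


TRACKED = frozenset({"RELATED", "ESTABLISHED"})

# Tracked-connections rule without an explicit proto, followed by the default deny.
broken = [Rule("accept", states=TRACKED), Rule("reject", proto="all")]
# The same pair with proto => 'all' on the tracked-connections rule.
fixed = [Rule("accept", proto="all", states=TRACKED), Rule("reject", proto="all")]

dns_reply = Packet(proto="udp", state="ESTABLISHED")
print(verdict(broken, dns_reply))
print(verdict(fixed, dns_reply))
```

With the implicit tcp match the udp reply falls through to the final reject; with proto set to all it is accepted by the tracked-connections rule.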

The fix: explicitly specifying proto => 'all'.

    firewall { '000 accept tracked connections':
      state  => ['RELATED', 'ESTABLISHED'],
      proto  => 'all',
      action => 'accept',
    }

Alternatively, I could reconfigure the default; but it’s fair enough that, as a result, I’d have to explicitly specify tcp for the majority of my rules. That’s a lot more verbose in the end.

    Firewall {
      proto => 'all',
    }

Once again, all is right with the world (or, at least, with RELATED and ESTABLISHED udp and icmp packets).

At some unknown point, ssh to at least some of our front-end nodes started spuriously failing when faced with a sufficiently large burst of traffic (e.g., cating a large file to stdout). I was the only person complaining about it, though–no other team members, no users–so I blamed it on something specific to my environment and prioritized it as an annoyance rather than as a real system problem.

Which is to say: I ignored the problem, hoping it would go away on its own.

Some time later I needed to access our system from elsewhere on the Internet, and from a particularly poor connection as well. Suddenly I couldn’t even scp reliably. I returned to the office, determined to pinpoint a root cause. I did my best to take my vague impression of “a network problem” and turn it into a repeatable test case, dding a bunch of /dev/random into a file and scping it to my local box.

    $ scp 10.129.4.32:data Downloads
    data    0% 1792KB   1.0MB/s - stalled -^CKilled by signal 2.
    $ scp 10.129.4.32:data Downloads
    data    0%  256KB   0.0KB/s - stalled -^CKilled by signal 2.

Awesome: it fails from my desktop. I duplicated the failure on my netbook, which I then physically carried into the datacenter. I plugged directly into the DMZ and repeated the test with the same result; but, if I moved my test machine to the INSIDE network, the problem disappeared.

Wonderful! The problem was clearly with the Cisco border switch/firewall, because (a) the problem went away when I bypassed it, and (b) the Cisco switch is Somebody Else’s Problem.

So I told Somebody Else that his Cisco was breaking our ssh. He checked his logs and claimed he saw nothing obviously wrong (e.g., no dropped packets, no warnings). He didn’t just punt the problem back at me, though: he came to my desk and, together, we trawled through some tcpdump.

15:48:37.752160 IP 10.68.58.2.53760 > 10.129.4.32.ssh: Flags [.], ack 36822, win 65535, options [nop,nop,TS val 511936353 ecr 1751514520], length 0

15:48:37.752169 IP 10.129.4.32.ssh > 10.68.58.2.53760: Flags [.], seq 47766:55974, ack 3670, win 601, options [nop,nop,TS val 1751514521 ecr 511936353], length 8208

15:48:37.752215 IP 10.68.58.2.53760 > 10.129.4.32.ssh: Flags [.], ack 36822, win 65535, options [nop,nop,TS val 511936353 ecr 1751514520,nop,nop,sack 1 {491353276:491354644}], length 0

15:48:37.752240 IP 10.129.4.32 > 10.68.58.2: ICMP host 10.129.4.32 unreachable - admin prohibited, length 72

The sender, 10.129.4.32, was sending an ICMP error back to the receiver, 10.68.58.2. Niggling memory of this “admin prohibited” message reminded me about our iptables configuration.

    -A INPUT -m state --state RELATED,ESTABLISHED -j ACCEPT
    -A INPUT -p tcp -m tcp --dport 22 -m state --state NEW -m comment --comment "ssh" -j ACCEPT
    -A INPUT -j REJECT --reject-with icmp-host-prohibited

iptables rejects any packet that isn’t explicitly allowed or part of an existing connection with icmp-host-prohibited, exactly as we were seeing. In particular, it seemed to be rejecting any packet that contained SACK fields.

When packets are dropped (or arrive out-of-order) in a modern TCP connection, the receiver sends a SACK message, “ACK X, SACK Y:Z”. The “selective” acknowledgement indicates that segments between X and Y are missing, but allows later segments to be acknowledged out-of-order, avoiding unnecessary retransmission of already-received segments. For some reason, such segments were not being identified by iptables as part of the ESTABLISHED connection.

A Red Hat bugzilla indicated that you should solve this problem by disabling SACK. That seems pretty stupid to me, though, so I went looking around in the iptables documentation in stead. A netfilter patch seemed to indicate that iptables connection tracking should support SACK, so I contacted the author–a wonderful gentleman named Jozsef Kadlecsik–who confirmed that SACK should be totally fine passing through iptables in our kernel version. In stead, he indicated that problems like this usually implicate a misbehaving intermediate firewall appliance.

And so the cycle of blame was back on the Cisco… but why?

Let’s take a look at that tcpdump again.

15:48:37.752215 IP 10.68.58.2.53760 > 10.129.4.32.ssh: Flags [.], ack 36822, win 65535, options [nop,nop,TS val 511936353 ecr 1751514520,nop,nop,sack 1 {491353276:491354644}], length 0

15:48:37.752240 IP 10.129.4.32 > 10.68.58.2: ICMP host 10.129.4.32 unreachable - admin prohibited, length 72

ACK 36822, SACK 491353276:491354644… so segments 36823:491353276 are missing? That’s quite a jump. Surely 491316453 segments didn’t get sent in a few nanoseconds.

A Cisco support document holds the answer; or, at least, the beginning of one. By default, the Cisco firewall performs “TCP Sequence Number Randomization” on all TCP connections. That is to say, it modifies TCP sequence ids on incoming packets, and restores them to the original range on outgoing packets. So while the system receiving the SACK sees this:

15:48:37.752215 IP 10.68.58.2.53760 > 10.129.4.32.ssh: Flags [.], ack 36822, win 65535, options [nop,nop,TS val 511936353 ecr 1751514520,nop,nop,sack 1 {491353276:491354644}], length 0

…the system sending the sack/requesting the file sees this:

15:49:42.638349 IP 10.68.58.2.53760 > 10.129.4.64.ssh: . ack 36822 win 65535 <nop,nop,timestamp 511936353 1751514520,nop,nop,sack 1 {38190:39558}>

The receiver says “I have 36822 and 38190:39558, but missed 36823:38189.” The sender sees “I have 36822 and 491353276:491354644, but missed 36823:491353275.” 491353276 is larger than the largest sequence id sent by the provider, so iptables categorizes the packet as INVALID.

But wait… if the Cisco is rewriting sequence ids, why is it only the SACK fields that are different between the sender and the receiver? If Cisco randomizes the sequence ids of packets that pass through the firewall, surely the regular SYN and ACK id fields should be different, too.

It’s a trick question: even though tcpdump reports 36822 on both ends of the connection, the actual sequence ids on the wire are different. By default, tcpdump normalizes sequence ids, starting at 1 for each new connection.

-S Print absolute, rather than relative, TCP sequence numbers.

The Cisco doesn’t rewrite SACK fields to coincide with its rewritten sequence ids. The raw values are passed on, conflicting with the ACK value and corrupting the packet. In normal situations (that is, without iptables throwing out invalid packets) this only serves to break SACK; but the provider still gets the ACK and responds with normal full retransmission. It’s a performance degradation, but not a catastrophic failure. However, because iptables is rejecting the INVALID packet entirely, the TCP stack doesn’t even get a chance to try a full retransmission.
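The arithmetic behind this can be reproduced with a small Python sketch. It is a simplified model, not the Cisco's or netfilter's implementation: the function names, the server's initial sequence number, and the direction of the rewrite are assumptions made for illustration. The relative values (36822, 38190:39558, 491353276:491354644, and 55974 as the end of the last data segment) come from the captures above.

```python
MOD = 2 ** 32  # TCP sequence numbers are 32-bit and wrap around


def relative(absolute, isn):
    """Sequence number relative to an initial sequence number (tcpdump's default view)."""
    return (absolute - isn) % MOD


def through_firewall(ack, sack_blocks, offset, rewrite_sack):
    """Translate a client-to-server ACK into the server's sequence space.

    With rewrite_sack=False the SACK option is passed on untouched,
    which is the behaviour described above.
    """
    new_ack = (ack - offset) % MOD
    if rewrite_sack:
        sack_blocks = [((left - offset) % MOD, (right - offset) % MOD)
                       for left, right in sack_blocks]
    return new_ack, list(sack_blocks)


def sack_is_plausible(ack_rel, sack_rel, highest_sent_rel):
    """Simplified check: a receiver cannot hold data beyond what was ever sent."""
    return all(ack_rel <= left < right <= highest_sent_rel
               for left, right in sack_rel)


SERVER_ISN = 1_000_000      # hypothetical; the real value is not in the captures
OFFSET = 491353276 - 38190  # gap between the two views of the same SACK edge
CLIENT_SIDE_ISN = (SERVER_ISN + OFFSET) % MOD
HIGHEST_SENT = 55974        # end of the last data segment in the server-side capture

# What the client puts on the wire, in its own sequence space:
# "ack 36822, sack 38190:39558".
client_ack = (CLIENT_SIDE_ISN + 36822) % MOD
client_sack = [((CLIENT_SIDE_ISN + 38190) % MOD, (CLIENT_SIDE_ISN + 39558) % MOD)]

for rewrite in (False, True):
    ack, sack = through_firewall(client_ack, client_sack, OFFSET, rewrite)
    ack_rel = relative(ack, SERVER_ISN)
    sack_rel = [(relative(l, SERVER_ISN), relative(r, SERVER_ISN)) for l, r in sack]
    print(rewrite, ack_rel, sack_rel,
          sack_is_plausible(ack_rel, sack_rel, HIGHEST_SENT))
```

When the SACK option is left untouched, the server-side view is ack 36822 with a SACK block of 491353276:491354644, which fails the plausibility check. When the option is translated along with the ACK, the block comes out as 38190:39558 and passes.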

Because TCP sequence id forgery isn’t a problem under modern TCP stacks, we’ve taken the advice of the Cisco article and disabled the randomization feature in the firewall altogether.

    class-map TCP
     match port tcp range 1 65535
    policy-map global_policy
     class TCP
      set connection random-sequence-number disable
    service-policy global_policy global

With that, the problem has finally disappeared.

    $ scp 10.129.4.32:data Downloads
    data    100%  256MB  16.0MB/s   00:16
    $ scp 10.129.4.32:data Downloads
    data    100%  256MB  15.1MB/s   00:17
    $ scp 10.129.4.32:data Downloads
    data    100%  256MB  17.1MB/s   00:15

Nov. 13

I'm going to get wood for my mage's staff. It seems I have to go to a wood on the far side of Imperial City if I'm to obtain it. Really, these mages don't seem to have any trouble making everything into long and laborious tasks. I hope it shall be worth it.

On my way I discovered an invisible town with residents asking me to fix their problem for them. Of course. I suppose I shall return if I find I have nothing better to do.

I arrived at the cave for mage's staffs to discover the person I needed to find was dead and a necromancer in his place. I don't even know why I was surprised. No one in this place ever seems to have much of a handle on things. I found the man I needed dead, and also an unfinished staff. I guess there's nothing to do but take my news back to the University.

I took my staff back to the University and got a new fangled one which shoots fire! At least some usefulness has come of all this. I also sold off some more gear. I do wish there was a faster way to make money in this stupid country for someone like me who really doesn't have much time to settle down into anything in particular.

Fort Blueblood.

I have gone seeking after an amulet for the Leyawiin Recommendation for the Mage's guild. Fort Blueblood was full of Marauders and Mages guarding the place. I am tired of continuing on these annoying quests, but I hope that my goal will be well worth it. I found some chests of gold and fought off the inhabitants as I could.

Eventually I found that the body and Amulet I sought were guarded by a Mage angry with the leader at Leyawiin. He wished merely to hide it from her in order to unseat her. How ridiculously petty and annoying. I am not sure why I should make a better Mage by completing tasks such as these for these people. At least the tasks are easy, if trivial and time wasting.

Now back to Dagail with her father's Amulet.

Dagail was very happy to receive the Amulet and to see an end to her visions. Yay for her, I suppose. One more recommendation is mine. On to the next banal quest for these useless people. I hope that becoming a mage does not make everyone so annoyingly trivial.

I sold off the Dwarven Armor which I had found in the Fort. I got a very nice price for it. I feel slightly mollified at that waste of time. I also went on to sell my other items and purchase.

I have reached the guild hall in Cheydinhal. I have been sent to retrieve a ring from a well behind the place. It is as if they had heard me complaining and decided to make their requests even more demanding and annoying. The guild leader is quite terse and seems to think this task is difficult. He probably threw zombies in the well or some such. This should be interesting, or at the very least, time wasting.

The ring was merely a trap to lure me into drowning in the well. It was a good thing that I had my magical water breathing necklace. I had to unburden myself of some of my things before I could pick up the amazingly heavy thing, but I managed it. Falcar, however, the good for nothing that assigned me the task has escaped without helping me as he said he might. I must either find a recommendation he left behind or seek the man himself to force his hand.

It seems that Deetre or whatever the woman's name is who ousted Falcar, is willing to write my recommendation herself. Upon finding black soul gems in the man's room and reporting to her, I was able to receive this promise and the assurance that it would be what I needed.

After I left the mage's guild, I found a man whose brother I had met a while back. He rejoiced, not having known that his brother was alive at all. He invited me to Chorrol to celebrate, and I intend to go and see if there is some sort of reward for supplying this information.

I went to Bruma. I found a woman there who sent me to quest after her and her lover's gold hoard. She wants me to get the location from him in jail and then reveal it to her. HA! More like get the location and never set foot near her again. Ah, gold.

Got the gold, got the gold! A guard in the jail double crossed everyone and killed off the woman for me. As both of the couple are out of the way, and a guard did all the evil, I was free to help myself to the loot with no guilt or trouble. If only it had been more than a measly 40 coin.

Fjotreid in Bruma has an 81 point disposition with me. It is a good place to sell my goods.

On to the Bravil Mage's guild. I hope I shall not have to do too many more of these quests… I had to restore a staff that a lovestruck mage stole from his woman, blah blah blah. I Beguiled the man who bought it in Imperial City and bought it back. I hate that I gave up 200 good gold for this. This recommendation had really better be worth it now.

Now I'm helping the Mage Guild leader find a friend. Why do I do these things? He is trapped in a dream world and I must release him. What is the solution? It seems I must join him in the dreamworld. I am not sure why his friend could not do it, but this task is, at least, interesting.

I got the man out of his dream. Blech, barely a reward. On to Arcane University!

This page, at the time of writing, is served by a virtual machine running at linode. Nothing special: just Debian 6. The node is more than just an Apache server: I connect to it via ssh a lot (most often indirectly, with Git).

As long as I can remember, there’s been a noticeable delay in establishing an ssh connection. I’ve always written this off as one of the side-effects of living so far away from the server; but the delay is present even when I’m in the US, and doesn’t affect latency as much once the connection is established.

Tonight, I decided to find out why.

First, I started a parallel instance of sshd on my VM, running on an alternate port.

# /usr/sbin/sshd -ddd -p 2222

Then I connected to this port from my local system.

$ ssh -vvv ln1.civilfritz.net -p 2222

I watched the debug output in both terminals, looking for any obvious delay. On the server, that delay was preceded by a particular log entry.

debug3: Trying to reverse map address 109.171.130.234.

The log on the client was less damning: just a reference to checking the assumable locations for ssh keys.

debug2: key: /Users/janderson/.ssh/id_ecdsa (0x0)

I confirmed that the address referenced by the server was one of the external NAT addresses used by my ISP. Presumably sshd or PAM is trying to determine a name for use in authorization assertions.

At that point, I set out to understand why there was a delay reverse-mapping the address. Unsurprisingly, KAUST hasn’t populated reverse-dns for the address.

    $ time host 109.171.130.234
    Host 234.130.171.109.in-addr.arpa. not found: 3(NXDOMAIN)

    real    0m0.010s
    user    0m0.004s
    sys     0m0.005s

That said, getting the confirmation that the reverse-dns was unpopulated did not take the seconds that were seen as a delay establishing an ssh connection.
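One reason the two measurements can disagree is that host sends its query straight to the DNS servers, whereas a daemon normally resolves addresses through the C library, which consults every source configured for the system. A rough way to time that second path is sketched below. It assumes that Python's socket.gethostbyaddr goes through the same C library lookup, which should hold on a typical glibc system; the `timed_reverse_lookup` helper is a name invented here.

```python
import socket
import time


def timed_reverse_lookup(address, resolver=socket.gethostbyaddr):
    """Return (hostname or None, seconds elapsed) for a reverse lookup.

    The resolver argument exists so the helper can be exercised with a
    stand-in function instead of a real network lookup.
    """
    start = time.monotonic()
    try:
        name = resolver(address)[0]
    except OSError:  # socket.herror and socket.gaierror are OSError subclasses
        name = None
    return name, time.monotonic() - start
```

Running `timed_reverse_lookup("109.171.130.234")` on the server itself would show whether the system-level lookup is slower than the direct DNS query made by host.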

I had to go… deeper.

# strace -t /usr/sbin/sshd -d -p 2222

Here I invoke the ssh server with a system call trace. I included timestamps in the trace output so I could more easily locate the delay, but I was able to see it in real-time.

00:27:44 socket(PF_FILE, SOCK_STREAM, 0) = 4

00:27:44 fcntl64(4, F_GETFD) = 0

00:27:44 fcntl64(4, F_SETFD, FD_CLOEXEC) = 0

00:27:44 connect(4, {sa_family=AF_FILE, path="/var/run/avahi-daemon/socket"}, 110) = 0

00:27:44 fcntl64(4, F_GETFL) = 0x2 (flags O_RDWR)

00:27:44 fstat64(4, {st_mode=S_IFSOCK|0777, st_size=0, ...}) = 0

00:27:44 mmap2(NULL, 4096, PROT_READ|PROT_WRITE, MAP_PRIVATE|MAP_ANONYMOUS, -1, 0) = 0xb7698000

00:27:44 _llseek(4, 0, 0xbf88eb18, SEEK_CUR) = -1 ESPIPE (Illegal seek)

00:27:44 write(4, "RESOLVE-ADDRESS 109.171.130.234\n", 32) = 32

00:27:44 read(4, "-15 Timeout reached\n", 4096) = 20

00:27:49 close(4) = 0

That’s right: a five second delay. The horror!

Pedantic troubleshooting aside, the delay is introduced while reading from /var/run/avahi-daemon/socket. Sure enough, I have Avahi installed.

    $ dpkg --get-selections '*avahi*'
    avahi-daemon                install
    libavahi-client3            install
    libavahi-common-data        install
    libavahi-common3            install
    libavahi-compat-libdnssd1   install
    libavahi-core7              install

Some research that is difficult to represent here illuminated the fact that Avahi was installed when I installed Mumble. (Mumble might actually be the most-used service on this box, after my use of it to store my Org files. Some friends of mine use it for voice chat in games.)

I briefly toyed with the idea of tracking down the source of the delay in Avahi; but this is a server in a dedicated, remote datacenter. I can’t imagine any use case for an mDNS resolver in this environment, and I’m certainly not using it now.

    # service avahi-daemon stop

Now it takes less than three seconds to establish the connection. Not bad for halfway across the world.

    $ time ssh janderson@ln1.civilfritz.net -p 2222 /bin/false

    real    0m2.804s
    user    0m0.017s
    sys     0m0.006s

Better yet, there are no obvious pauses in the debugging output on the server.

Avahi hooks into the system name service using nsswitch.conf, just as you’d expect.

    $ grep mdns /etc/nsswitch.conf
    hosts: files mdns4_minimal [NOTFOUND=return] dns mdns4
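The same check can be scripted. The sketch below is a minimal parser for the hosts line, assuming the common single-token form of bracketed actions such as [NOTFOUND=return]; the `hosts_sources` and `uses_mdns` names are invented for illustration.

```python
def hosts_sources(nsswitch_text):
    """Return the lookup sources on the 'hosts:' line, in order, without actions."""
    for line in nsswitch_text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and padding
        if line.startswith("hosts:"):
            tokens = line[len("hosts:"):].split()
            # Bracketed tokens are actions, not sources.
            return [tok for tok in tokens if not tok.startswith("[")]
    return []


def uses_mdns(nsswitch_text):
    """True if any hosts source is an mDNS module (its name starts with 'mdns')."""
    return any(src.startswith("mdns") for src in hosts_sources(nsswitch_text))


before = "hosts: files mdns4_minimal [NOTFOUND=return] dns mdns4\n"
after = "hosts: files dns\n"
print(hosts_sources(before), uses_mdns(before))
print(hosts_sources(after), uses_mdns(after))
```

On a real system the text would come from reading /etc/nsswitch.conf. The order of the returned list is the order in which the sources are consulted.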

Worst case I could simply edit this config file to remove mDNS; but I wanted to remove it from my system altogether. It should just be “recommended” by mumble-server, anyway.

# aptitude purge avahi-daemon libnss-mdns

With the relevant packages purged from my system, nsswitch.conf is modified (automatically) to match.

    $ grep hosts: /etc/nsswitch.conf
    hosts: files dns

Problem solved.

Unexpectedly, I find myself in Riyadh tonight. This is my first trip here, though so far the sum total of my experience has been airport, taxi, and hotel.

I hope that I manage to see something uniquely Riyadh during the trip, though I will not be surprised if my journey is a sequence of point-to-point trips, with no time in the actual city. A shame.

The story, so far as I know it, is that we (my team lead, Andrew Winfer, and I) have been called out as representatives of KAUST to assist KACST in the configuration of their Blue Gene/P. They have a single rack (4096 PowerPC compute cores, likely with four terabytes of memory distributed among them). As I understand it, KACST is structured much like a national laboratory, and this system is being managed by the group of research scientists using it. Apparently they haven't been terribly pleased with the system thus far; but a Blue Gene is a bit different from a traditional cluster, and those differences can be confusing at first.

I hope we will be able to assist them. More exciting, though, is the possibility that this is the first in a series of future collaborations between our two institutions.

Of course, I haven't been to KACST yet: we only just arrived in Riyadh at 22:00. I'm procrastinating sleep with the trickle of Internet available in my room.

KAUST has put us up in the Brzeen Hotel. (I'm giving up on trying to isolate a correct Arabic spelling.) The room is perfectly serviceable, if a bit barren; but overshadowing everything else is the size of it all.

Anyway: I expect there will be more interesting things to say tomorrow.

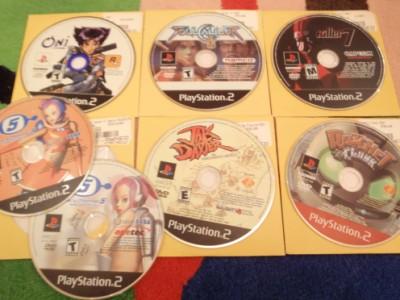

During my recent trip to the states I visited with family and friends; traveled via plane, bus, and car; wandered the streets of Chicago; and climbed [[!wikipedia Medicine_Bow_Peak desc="the highest peak in the snowy range"]]. But today I'm going to talk, in stead, about the pile of games that I acquired.

GameStop has apparently decided to get out of the used PS2 market. I can hardly blame them: they have an incredible inventory with a low signal-to-noise ratio. The Illinois stores that I visited all had "buy two, get two free" sales ongoing, and the Wyoming stores still had lots of boxed and unboxed games at pretty low prices.

I only got my PS2 in 2009 while I was stuck in hotel rooms waiting to move to Saudi. There's a lot of good PS2 games that I have yet to play, and the huge GameStop inventory means I'm bound to find at least a few good games. The aforementioned low signal-to-noise ratio meant that I spent a lot of time leafing through the same Guitar Hero, sports, and racing games. That said, I almost never left a store empty-handed.

I also picked up a few DS games. I ordered Dragon Quest V and Retro Game Challenge from Amazon before we left the kingdom: Dragon Quest V has always interested me since I read Jeremy Parish's writeups on GameSpite and 1UP; and I played Retro Game Challenge back at Argonne with Daniel and Cory, but... less than legitimately.

I've been tangentially aware of the Professor Layton series for a while, but never with any detail. I originally thought it was some kind of RPG, but my interest waned a bit in the face of its more traditional puzzle structure. Andi took to them quickly, though: she's already finished "The Curious Village," and we got a copy of "The Diabolical Box," too.

Like I said, I've come to the PS2 a bit late, so I'm doing what I can to go back and visit the classics. I found boxed copies of "Metal Gear Solid 2: Substance" and "Metal Gear Solid 3: Subsistence"... awesome! I've already started enjoying MGS2 (though I must admit that I have been disappointed by Raiden in comparison with Solid Snake, even without any preconceptions or hype.) I later found out that Subsistence contains ports of the MSX versions of "Metal Gear" and "Metal Gear 2: Solid Snake." Very cool: now I won't have to suffer through the horrible NES localization.

I heard about "Space Channel 5" on Retronauts' Michael Jackson episode. Knowing that it (and its "Part 2") were just forty minutes each, I tackled those first thing when I got home. Aside from possibly Incredible Crisis, these are the most Japanese games I've ever played. The first in the series had a few technical flaws (at least, the PS2 port I got did), most off-putting being the incredibly picky controls. That was cleaned up in the second game, along with the VCD-quality pre-rendered backgrounds from the Dreamcast original.

I jumped on a disc-only copy of Suda51's "Killer7" on Yahtzee's recommendation: "As flawed as it is, get it anyway, because you'll never experience anything else like it." I haven't even put this one in the console, yet, but the last game I played on a Zero Punctuation recommendation was Silent Hill 2: one of the best games I've ever played. I don't expect Killer7 to be as significant (or even comprehensible) as that, but it should at least be interesting.

A copy of "Oni" seemed familiar, but only in the back of my mind. Turns out it's pre-Halo Bungie. (Actually, Bungie West.) The Marathon influence is obvious, but the default controls are really twitchy. Hopefully I can adjust the sensitivity a bit when I come back to it.

I didn't have any multiplayer PS2 games yet, so I picked up copies of "Soul Calibur II" and "Gauntlet: Dark Legacy" for parties. Of course, I forgot that I only have one PS2 controller. Hopefully I can pick up a few more along with a four-player adapter. (Otherwise, what's the point of Gauntlet?) Soul Calibur is as well-made as I expected (though the disc is FMV-skippingly scratched); but Gauntlet comes off as a bit cheap. The gameplay seems good enough: it just doesn't feel as classic as it deserves.

I keep hearing mention of the "Ratchet and Clank" series, but I haven't touched a PlayStation platformer since MediEvil. It hasn't really caught my attention yet, but maybe "Jak and Daxter" will.

I picked up a copy of "Batman Begins," but I apparently had it confused with "Arkham Asylum." "Tokobot Plus" was actually a "new" game, but priced down such that you couldn't tell. I guess it didn't sell well: but it reminds me of the "Mega Man: Legends" world. Hopefully it will, at least, be interesting.

All told I got twelve games (fourteen if you count the MSX games included with Subsistence). [edit: turns out that I only got disc 1, so no MSX games.] Despite Batman's misdirection, I think I did alright--all for less than you'd spend on two current-generation games--and not one generic brown FPS in the lot.